Warning – really long! I learned so much!

It was a hectic week for me. I co-chaired the Testing & Quality Track with Markus Gärtner, which meant I went to a lot of sessions in our track – no hardship! I also did an Agile Boot Camp session on “Everyone Owns Quality” with Janet Gregory, and a hands-on manual UI testing workshop with Emma Armstrong. (Slides for both of those sessions are on the Agile 2015 program.) Here are some highlights of what I learned in sessions and conversations.

Note: Slides for many of these sessions are accessible via the Agile 2015 program online. I urge you to go look!

A Magic Carpet Ride: A business perspective on DevOps, Em Campbell-Pretty

Em Campbell-Pretty shared her experiences with improving quality in a giant enterprise data warehouse. I love her “just do it” attitude in the face of seemingly unsolvable problems (such as 400 pages of requirements!) I suspect her wonderful sense of humor helped her cope! Here’s a sample: “It would be fair to say that panic set in. Ah – opportunity!”

An example of Em’s creative approach: A program-wide retrospective helped stop the finger-pointing and led to improvements, but the Ops and Integration & Build teams were kept separate from the delivery team. When Em couldn’t get this changed, she appointed herself Product Owner of those teams.

Em noted that data warehouse developers often don’t come from the world of software engineering, so they don’t have the same understanding of source code control and the idea of frequent commits and integrations.

Some great tips from Em: Shrink the change, don’t try to boil the ocean. Make sure every team has time carved out to get familiar with a new process. Culture matters! Their onshore team didn’t even know each other’s names, plus there was an offshore team. Em started a “Unity Hour” to kick off every sprint by bringing everyone together to play games. She showed a hilarious video of a haka performed by one of the teams.

The results Em shared showed that all these efforts paid off: for example, huge reductions in the time and effort needed to deploy a new release.

At this point, I had to leave to enjoy talking with the inimitable Howard Sublett for an Agile Amped video podcast. (Subscribe to the series on iTunes; those videos are full of great people and great ideas!) You can find links to Em’s slides on the program.

Mob exploratory testing! with Maaret Pyhäjärvi

Maaret Pyhäjärvi led an enthusiastic group through a mob “deep testing” session. This is a terrific way to help people learn effective in-depth testing techniques and provide quick feedback.

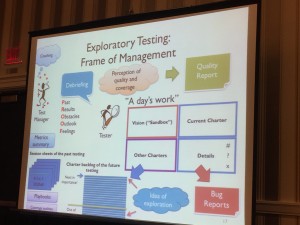

Use visuals to plan your day. Maaret uses a 2×2 model. Personally I like mind maps. Find what helps your brain the most. Plan to test fewer things, taking your time, going deep.

Our attempt at hands-on mob testing a real app showed this is a skill you have to practice. Mob testing follows mob programming practices. The navigator has to tell the driver – the person at the keyboard – what to do. The rest of the mob contributes their observations and ideas. Use a timer to have everyone switch roles at a set, short interval. Skills such as how to talk to the driver and where to focus your testing take deliberate practice. Take time to learn!

One important lesson was to ask questions, learn the terminology, and focus on the areas where feedback will be most valuable. My own team has tried mob testing once so far, and having the PO in the room helped a lot. Maaret says learning to test is like learning to drive a car. Don’t try to change gears in the middle of a turn – keep it simple, do one thing at a time, practice in a safe place.

Prototyping – Iterating Your Way to Glory, Melissa Perri and Josh Wexler

In this session I learned more about how to design and test paper prototypes. If you use high-fidelity mockups on a computer, even if you created them quickly with a design tool, people fall into the “fear of killing your baby” syndrome. So much energy has gone into the idea, nobody wants to suggest changes. So, use pencil and paper. Color just distracts people – “I don’t like that blue”. Keep it simple.

One aha moment for me was the idea of creating goals for the persona you’re using. What would the user want to accomplish with the UI? We practiced sketching our prototypes for a mobile UI following a detailed narrative tailored to the persona, then role playing the persona and getting feedback on the design. Learning to ask open-ended questions is a skill I hope to improve. “What would you expect to happen when you click this button?” With these fast feedback cycles, we can learn so much before we write a line of code, and save a ton of time. Emma and I made this point in our own workshop.

Linda Rising, Stalwart Session

My sketch notes for this session are already posted. But in case you can’t interpret my drawings, here are a few highlights of the conversation.

Linda talked about how we resist change. For example, academics don’t want to modernize their programming courses: “The Cobol course is already in the can”.

Linda cited examples from Menlo Innovations on how to find joy through learning. She urged us to be our own scientists and do small experiments. There’s no one right answer, but patterns help. You’ve got to see it in action; don’t depend on “experts” who don’t know what feedback you’ll get.

Transparency is important in growing belief from the ground up. If there’s no trust, controlling doesn’t work. Don’t try to “sell” – instead, listen respectfully to what people have to say. You can change the environment. I’ve used the patterns from Linda and Mary Lynn Manns’ More Fearless Change, and I can attest to their helpfulness in trying small experiments and nudging baby steps of change.

Linda urged us to never give up on anybody. Don’t stereotype. Try, experiment, listen and learn, adapt. Fear less! We all come with biases and beliefs, but we have to keep changing, and people do shift their beliefs. Linda recommended a podcast series and book, “You Are Not So Smart”. That’s going on my podcast list!

Does the role of tester still exist? Juan Gabardini

Juan says we no longer identify ourselves by the activities we perform. I’d like that to be true, and I do think we are moving in that direction, but it seems hard for humans to dispense with labels. There are people out there who think “Testing isn’t technical, anyone can test”. Juan led us through considering “old school” testing in phased and gated environments versus how we test in agile development.

“Keep your eyes on the stars and your feet on the ground” urged Juan. Domain knowledge from contact to sell, from order to delivery is essential. He talked about “improving the last mile” by applying the scientific method. Use models such as Cynefin to understand the complexity of a feature. In our table groups, we came up with our own ideas for skills and characteristics that help with testing on agile projects.

Elisabeth Hendrickson, Stalwart

I was in the fishbowl for part of this session, which impacted my note-taking. Elisabeth shared how she moved from the title of “Director of Quality Engineering” to “Director of Engineering” by collaborating with four other directors so that they shared responsibility for global quality across all the teams working on the product, and removing obstacles for the teams. If developers have a problem, they ask the first director they see for help.

Elisabeth described how a few “explorers” joined delivery teams without testers and amped up their exploratory testing. They applied good development practices to the cloud. They automated solutions to lots of problems, for example, they use images to make every dev and test environment consistent. A message I got from this session was that we add value by providing something nobody else can provide.

One issue raised in the fishbowl was QA managers who resist a whole-team approach and don’t want to test early and often. Elisabeth said some of these managers identify as “the person at the end”, and it’s hard for them to let go of that role. We have to create safety for testers who are worried about changes.

A great quote from a participant: “Our CEO went to a conference and came back and said we should be more DevOps-y.” I didn’t note down Elisabeth’s answer to that!

Mob Programming – Jason Kerney

I don’t have good notes on this because I couldn’t hear the presenter very well. Luckily, this was recorded on video, and you can read the experience report paper that goes along with it! I appreciated hearing the experiences of Jason’s team. The paper is really helpful, with ideas such as doing study sessions. I wish my team would try mob programming in certain situations, such as when they’re doing something new where maybe only one developer has experience. We’re sure going to keep doing mob testing sessions when appropriate.

Be Brave! Try an Experiment! Linda Rising

I missed the beginning of this awesome session due to another video interview (this one with Janet, by Craig Smith of InfoQ; I don’t know where to find it yet). But Linda’s slides are on the Agile 2015 program.

I learned the idea of continually trying small experiments from Linda years ago – it works! Linda noted that we aren’t doing scientific experiments, rather, we are doing trials, tinkering to see what works better. But, just for ease of conversation, we can still call them experiments.

We’re all born as natural scientists, but the way our schools work changes our focus from exploring and trying things – thinking “how” – to linear thinking, focusing on “what”. Agile lets us be babies again. Failure is OK! We can be more scientific. We need MANY experiments, and we don’t have the resources to do good science. Action is our best hope – not to find the truth or understand the why, but learn what works for us in our environment.

Linda explained confirmation bias: we only see information that confirms the beliefs we already hold. We also suffer from cognitive dissonance, the discomfort of holding two conflicting beliefs. To help overcome the biases all humans have, talk out loud, draw on whiteboards, and get enough sleep. There was more, but I couldn’t sketch note fast enough.

Her message was clear, though. What small action can we take to see whether something works for us or not? Not in theory, but really trying it. Do many small, simple, fast and frugal trials. Vary the context, the number of participants, the degree of enthusiasm, the kind of project. The goal is learning about the thing, not proving it works for everyone. Re-test.

Be prepared to be surprised and learn even from “failure”. Don’t keep doing something because you’ve got so much invested in it. The investment is already gone. If you can’t finish your expensive steak, its cost makes no difference now. Involve everyone. Poke, sense, respond. Don’t look for answers, find ideas for trials.

If we have good outcomes, but “those people” (for example, managers) are in our way, don’t try to convince them. They also have biases supporting their beliefs. Data doesn’t convince people. Instead, show management the strategic value in a way that is easy to understand. Emphasize that learning happens regardless of outcome.

Performance Testing in Agile Contexts, Eric Proegler

Eric started by explaining some performance risks in areas such as capacity and reliability, and talked about the classes of bugs related to these. Please see his slides for this information – and for the cool photos from 1970s IBM sales literature. Most people do simulation testing right before release – when it’s too late to do anything about it.

My main takeaway is that we don’t have to do a perfect performance test where we have an environment exactly like production, and a load of “users” who behave exactly as real users behave. Eric called this the “illusion of realism”. Stop trying to test exactly what a user might do in a system that doesn’t exist yet. Instead ask: What’s a test I can do now, a test I can do in a day?

Small, fast, inexpensive tests done frequently as we iterate on our products will help us sniff out all kinds of performance issues. Don’t worry about imitating the prod environment. Isolation is more important than ‘real’. These tests need to be repeatable and reliable. Use simple workflows that are easy to recreate, avoid data caching effects. Be ready to troubleshoot when you find anomalies. We can build trust in these tests, recalibrate as necessary, use yesterday’s build to verify today’s results. Add these tests to your CI.

“Who needs ‘real’? Let’s find problems!” Do burst loads with no ramp, no think time, no pacing. Try 10 threads with 10 iterations each, or 1 thread with 100 iterations. Do soak tests – 1 or 10 threads running for hours or days.
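To make the burst idea concrete, here is a minimal Python sketch, not Eric’s tooling. It assumes the thing under test can be wrapped in a plain function; `burst_load`, `summarize`, and `fake_request` are hypothetical names for illustration. Every worker is submitted at once and loops with no sleep between calls, so there is no ramp, think time, or pacing.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def burst_load(operation, threads, iterations):
    """Run `operation` as a burst: no ramp-up, no think time, no pacing.

    All `threads` workers are submitted at once, and each one calls
    `operation` `iterations` times back to back. Returns every call's
    duration in seconds.
    """
    def worker():
        durations = []
        for _ in range(iterations):
            start = time.perf_counter()
            operation()
            durations.append(time.perf_counter() - start)
        return durations

    with ThreadPoolExecutor(max_workers=threads) as pool:
        futures = [pool.submit(worker) for _ in range(threads)]
        return [d for f in futures for d in f.result()]


def summarize(durations):
    """Call count, nearest-rank 95th percentile, and maximum duration."""
    ordered = sorted(durations)
    rank = math.ceil(len(ordered) * 95 / 100)  # nearest-rank method
    return {"calls": len(ordered), "p95": ordered[rank - 1], "max": ordered[-1]}


if __name__ == "__main__":
    def fake_request():
        # Hypothetical stand-in for one request to the system under test.
        time.sleep(0.01)

    print(summarize(burst_load(fake_request, threads=10, iterations=10)))
    print(summarize(burst_load(fake_request, threads=1, iterations=100)))
```

A soak test is the same loop with 1 or 10 threads and enough iterations to keep it running for hours.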

We can also use sapient techniques, something as simple as using a stopwatch, screen captures, videos, tools like Fiddler. One technique which we use on our own team is to put a load on the system in the background (we use Postman scripts driving our API for this) while just one or two people use the UI. You can use browser dev tools to measure performance.

You can also extend your existing automation. Add timers and log response times in your automated regression tests. This is something I’d like our team to try, since our tests are timed in CI. Watch for trends, be ready to drill down. Automation extends your senses, but doesn’t replace them.
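One way to add timers to existing automated tests and watch for trends is sketched below in Python. It assumes a pytest-style suite; `timed`, `save_timings`, `flag_slowdowns`, and the 1.5× tolerance are hypothetical choices for illustration, not something Eric prescribed. Saving each run’s timings lets you compare today’s build with yesterday’s.

```python
import json
import time
from contextlib import contextmanager

# Step name -> elapsed seconds recorded during this test run.
TIMINGS = {}


@contextmanager
def timed(step):
    """Time a block inside an existing automated test and record the result."""
    start = time.perf_counter()
    try:
        yield
    finally:
        TIMINGS.setdefault(step, []).append(time.perf_counter() - start)


def save_timings(path):
    """Write this run's timings to a JSON file the CI job can keep."""
    with open(path, "w") as f:
        json.dump(TIMINGS, f, indent=2)


def flag_slowdowns(today, baseline, tolerance=1.5):
    """Steps whose average time exceeds the baseline average by more than `tolerance` times."""
    flagged = []
    for step, samples in today.items():
        previous = baseline.get(step)
        if not samples or not previous:
            continue  # new or empty step: nothing to compare against yet
        if sum(samples) / len(samples) > tolerance * sum(previous) / len(previous):
            flagged.append(step)
    return sorted(flagged)


def test_search_is_still_fast():
    # Hypothetical regression test; the sleep stands in for a real search step.
    with timed("search"):
        time.sleep(0.01)
    assert TIMINGS["search"]
```

A flagged step is a prompt to drill down, not an automatic failure; the automation extends your senses rather than replacing them.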

You don’t have to test the whole system end to end to get valuable feedback. Login/logoff are expensive, and matter for everyone. Same with search functions. So focus on those. Think about user activities like road systems – are there highways?

Test at the API level. My teammate JoEllen Carter wrote Postman scripts to test API endpoints. She included assertions on the response time, so a test fails if it takes longer than expected. Eric suggests using mocks and stubs, service virtualization, and built-in abstraction points to simulate parts of the system that are still in progress.
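JoEllen’s checks live in Postman scripts; the Python sketch below only shows the same idea, a functional check plus a response-time limit on one endpoint, using the standard library. The function name `check_endpoint` and the limits are hypothetical.

```python
import time
import urllib.request


def check_endpoint(url, max_seconds, timeout=10.0):
    """Call `url` once and fail if it is not HTTP 200 or is slower than `max_seconds`.

    urlopen raises HTTPError for 4xx/5xx responses, so those fail as well.
    Returns the elapsed time in seconds.
    """
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()  # include the body transfer in the measured time
        status = response.status
    elapsed = time.perf_counter() - start
    if status != 200:
        raise AssertionError(f"{url} returned HTTP {status}")
    if elapsed > max_seconds:
        raise AssertionError(f"{url} took {elapsed:.3f}s (limit {max_seconds}s)")
    return elapsed
```

Usage might look like `check_endpoint("http://localhost:8080/api/search?q=widgets", max_seconds=0.5)`, where the URL and limit are placeholders for your own API and expectations.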

Eric also suggested testing individual layers of the system, such as load testing web services directly, or building a test harness to measure the response time to push a message onto a message bus and pull it off again. Here too, mocks, stubs, and built-in abstraction points help.
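A message-bus harness could look roughly like the Python sketch below. It is only an illustration under stated assumptions: `queue.Queue` stands in for a real bus, and `echo_consumer` is a hypothetical stub that passes each message straight back. With a real bus you would swap in its client’s publish and receive calls.

```python
import queue
import threading
import time


def echo_consumer(inbound, outbound):
    """Hypothetical stub consumer: passes each message straight back until it gets None."""
    while True:
        message = inbound.get()
        if message is None:
            break
        outbound.put(message)


def measure_round_trips(inbound, outbound, count, timeout=5.0):
    """Push `count` messages and time how long each takes to be pulled back off."""
    latencies = []
    for i in range(count):
        start = time.perf_counter()
        inbound.put(i)
        received = outbound.get(timeout=timeout)  # raises queue.Empty if nothing arrives
        latencies.append(time.perf_counter() - start)
        if received != i:
            raise AssertionError(f"expected message {i}, got {received}")
    return latencies


if __name__ == "__main__":
    to_bus, from_bus = queue.Queue(), queue.Queue()
    consumer = threading.Thread(target=echo_consumer, args=(to_bus, from_bus), daemon=True)
    consumer.start()
    results = measure_round_trips(to_bus, from_bus, count=100)
    to_bus.put(None)  # tell the stub consumer to stop
    consumer.join()
    print(f"max round trip: {max(results) * 1000:.2f} ms")
```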

You can still do simulations before release, but in an agile project with short iterations, it pays to get feedback on performance with small, cheap, quick, repeatable, trustworthy tests.

Visual Testing, Mike Lyles

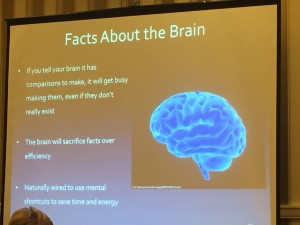

I mostly took pictures of Mike’s slides, so you should just look at his slide deck. Mike had all these cool examples of how our brains get confused and misled. Understanding how our brain works can help us see what’s hard to notice, and get over our biases such as inattentional blindness.

Our team (again thanks to JoEllen) has started using a visual diffing tool, Depicted, to help us identify subtle changes in UIs. We hope to use SauceLabs to get screenshots from each browser/version, and run those screens through Depicted to compare with gold masters and flag differences for further investigation.
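This is not how Depicted works internally. It is only a minimal Python sketch of the gold-master idea, assuming each screenshot has already been decoded into rows of `(r, g, b)` tuples, for example by an image library. `diff_screens` and its `tolerance` parameter are hypothetical.

```python
def diff_screens(gold, current, tolerance=0):
    """Compare a screenshot against its gold master.

    Both images are rows of (r, g, b) tuples of the same size. A pixel counts as
    changed when any channel differs by more than `tolerance`. Returns the
    fraction of changed pixels and the inclusive bounding box
    (left, top, right, bottom) of the changes, or None if nothing changed.
    """
    if len(gold) != len(current) or any(len(g) != len(c) for g, c in zip(gold, current)):
        raise ValueError("screenshot size changed; flag it for a human to look at")
    changed = []
    for y, (gold_row, current_row) in enumerate(zip(gold, current)):
        for x, (g, c) in enumerate(zip(gold_row, current_row)):
            if max(abs(a - b) for a, b in zip(g, c)) > tolerance:
                changed.append((x, y))
    if not changed:
        return 0.0, None
    total = sum(len(row) for row in gold)
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return len(changed) / total, (min(xs), min(ys), max(xs), max(ys))
```

Any non-zero fraction is a candidate for further investigation. The bounding box tells a person where on the screen to look, and a small `tolerance` can absorb rendering noise between browsers.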

Example Mapping, Matt Wynne

I missed this session AND the open jam session Matt did. But Markus Gärtner, who co-chaired the testing track with me, explained what he learned about example mapping from attending the session. I also took a look at Matt’s slide deck from an earlier conference that is on the same theme. It sounds to me like another way to have a story-level conversation among testers, programmers, product owners and other stakeholders to elicit examples, distinguish those from business rules, and write down questions we need to answer before we can finish the story.

I also got to eavesdrop on a lunchtime conversation between Matt and Ellen Gottesdiener. Ellen explained techniques from her book with Mary Gorman, Discover to Deliver, such as structured conversations. I don’t know what they concluded, but to me, it sounded like their techniques achieved similar goals, though Ellen’s book goes much deeper into many ways to help business stakeholders identify the most important business value.

I have a feeling that any conversation you have that involves writing on a whiteboard, on sticky notes, on an online mind mapping tool, or on the back of a napkin will help build shared understanding of how a feature should work. One big takeaway from Agile 2015 is that visuals help our brains learn new things. Next time you start talking to a teammate about a story, walk over to a whiteboard, or get out some paper – and invite others to join you.

Get out there and start doing your small, frugal, repeatable experiments! Please let me know how it goes!
